
On 13th March 2024, EU lawmakers approved the EU AI Act by an overwhelming majority (523 votes in favour, 46 against, and 49 abstentions). The first-ever comprehensive legal framework on artificial intelligence (AI), the Act takes a risk-based approach to regulating AI and its use.

But what is the AI Act? In this article, we break it all down, outlining its key features, the new requirements it sets, and how consumers and developers of artificial intelligence alike will be affected.

What is the EU AI Act?

The EU AI Act is a European Union regulation that establishes a comprehensive framework for the regulation of artificial intelligence within the EU.

The Act also establishes the European Artificial Intelligence Board, made up of representatives of the member states, to advise on and coordinate consistent application of the regulation and to provide guidance on AI-related issues.

As a whole, this ambitious legislation seeks to address various aspects of AI deployment, including its ethical implications, accountability, and potential risks. Importantly, developers located outside the EU who develop, sell, or use AI in the EU market will also have to conform to the regulations outlined in the AI Act. This includes developers from countries such as the UK and the USA.

So, what are some of the Act’s main features?

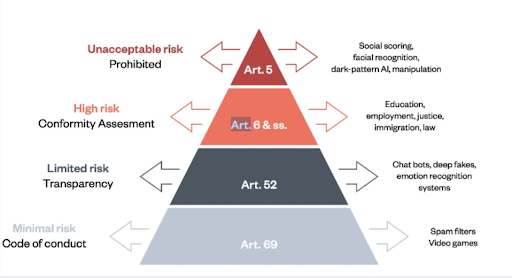

The four bands of risk in the EU AI Act

The Act splits AI into four different bands of risk based on the intended use of a system. Of these four categories, the Act is most concerned with ‘high-risk AI’.

Prohibited AI

The legislation prohibits the following uses of AI, as they are deemed practices that threaten fundamental rights or manipulate individuals’ behaviour in a harmful manner.

- Social credit scoring systems

- Emotion recognition systems at work and in education

- AI used to exploit people’s vulnerabilities (e.g., age, disability)

- Behavioural manipulation and circumvention of free will

- Untargeted scraping of facial images for facial recognition

- Biometric categorisation systems using sensitive characteristics

- Specific predictive policing applications

- Law enforcement use of real-time biometric identification in public (apart from in limited, pre-authorised situations)

High-risk AI

The uses classified as “high-risk” AI are:

- Medical devices

- Vehicles

- Recruitment, HR and worker management

- Education and vocational training

- Influencing elections and voters

- Access to services (e.g., insurance, banking, credit, benefits etc.)

- Critical infrastructure management (e.g., water, gas, electricity etc.)

- Emotion recognition systems

- Biometric identification

- Law enforcement, border control, migration and asylum

- Administration of justice

- Specific products and/or safety components of specific products
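
For teams keeping an inventory of their AI systems, the two lists above can be treated as a simple lookup. The snippet below is a minimal sketch only: the labels are hypothetical shorthand for a handful of the listed uses, the sets are deliberately incomplete, and a real classification depends on the legal text and the system's intended use, not on string matching.

```python
from enum import Enum


class RiskBand(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high-risk"
    UNLISTED = "not in the lists above"


# Hypothetical shorthand labels for a subset of the prohibited uses listed above.
PROHIBITED_USES = {
    "social credit scoring",
    "emotion recognition at work",
    "emotion recognition in education",
    "untargeted facial image scraping",
}

# Hypothetical shorthand labels for a subset of the high-risk uses listed above.
HIGH_RISK_USES = {
    "medical devices",
    "vehicles",
    "recruitment",
    "education and vocational training",
    "critical infrastructure management",
    "biometric identification",
    "administration of justice",
}


def risk_band(intended_use: str) -> RiskBand:
    """Look up the band for an intended use, checking prohibited uses first."""
    use = intended_use.strip().lower()
    if use in PROHIBITED_USES:
        return RiskBand.PROHIBITED
    if use in HIGH_RISK_USES:
        return RiskBand.HIGH
    return RiskBand.UNLISTED


print(risk_band("Recruitment"))  # RiskBand.HIGH
```

Prohibited uses are checked first so that a use appearing under both headings (emotion recognition, for instance) is flagged at the stricter level.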

Key requirements regarding high-risk AI

The following will be required of AI systems classified as “high-risk”.

- Fundamental rights impact assessment and conformity assessment

- Registration in public EU database for high-risk AI systems

- Risk management and quality management systems

- Data governance (e.g., bias mitigation, representative training data etc.)

- Transparency (e.g., Instructions for Use, technical documentation etc.)

- Human oversight (e.g., explainability, auditable logs, human-in-the-loop etc.)

- Accuracy, robustness and cyber security (e.g., testing and monitoring)

Undertaking assessments

As noted in the requirements above, developers of high-risk AI systems will be obligated to undertake a conformity assessment, which checks that the AI product complies with the requirements set out in the AI Act.

Upon successful completion of the assessment, the product will receive a “CE” marking, the same mark found on the back of most appliances, indicating that it meets the required standards.

In addition to conformity assessments, developers will also be required to undertake a data protection impact assessment (DPIA). This assessment evaluates the potential impact of the AI system on individuals’ data privacy and ensures that appropriate measures are in place to protect personal data.

What does the EU AI Act mean for us (and our customers)?

Enhanced protection

The EU AI Act is designed to enhance consumer protection by mitigating the risks associated with AI technologies. By imposing strict requirements on high-risk AI systems, the legislation aims to safeguard individual rights and privacy.

Increased transparency and accountability

Consumers can expect greater transparency regarding the use of AI in products and services. Companies will be obligated to disclose information about the AI systems they employ, helping consumers make informed choices and understand the implications of AI-driven decisions.

The EU AI Act emphasises transparency and accountability in AI deployment, requiring that developers and deployers of AI systems provide clear and accessible information about the capabilities, limitations, and potential biases of their AI applications.

For example, when users are interacting with AI systems like chatbots, they should be made aware of this so that they can decide whether they want to continue interacting with a machine, or stop. Also, AI-generated content intended to inform the public on matters of public interest (including deep-faked audio and video content) must be clearly labelled as such.

Penalty regime

The EU AI Act introduces a penalty regime to enforce compliance with its provisions. Companies found to be in violation of the regulations may face significant fines, proportionate to the severity and impact of the infringement. Repeat offenders or those engaging in particularly egregious behaviour may face additional sanctions, including the suspension or revocation of their “CE” marking, effectively prohibiting them from marketing their AI products in the EU market.

Further details on penalties and enforcement of the AI Act are as follows:

- Up to 7% of global annual turnover or €35 million, whichever is higher, for prohibited AI violations

- Up to 3% of global annual turnover or €15 million, whichever is higher, for most other violations

- Up to 1% of global annual turnover or €7.5 million, whichever is higher, for supplying incorrect information

- Caps on fines for SMEs and start-ups

- European ‘AI Office’ and ‘AI Board’ established centrally at the EU level

- Market surveillance authorities in EU countries to enforce the AI Act

- Any individual can make complaints about non-compliance
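
To see how the fine ceilings scale with company size, the arithmetic can be sketched in a few lines. This is an illustration under stated assumptions, not legal advice: the tier names and the `fine_ceiling` function are hypothetical. The "whichever is higher" rule (and "whichever is lower" for SMEs and start-ups) follows Article 99 of the adopted Regulation (EU) 2024/1689, and the third tier uses the 1% figure from Article 99(5). Check all figures against the official text before relying on them.

```python
from decimal import Decimal

# Hypothetical tier names mapped to (share of global annual turnover, fixed amount in EUR).
FINE_TIERS = {
    "prohibited_ai": (Decimal("0.07"), Decimal("35000000")),
    "other_violation": (Decimal("0.03"), Decimal("15000000")),
    "incorrect_information": (Decimal("0.01"), Decimal("7500000")),
}


def fine_ceiling(tier: str, global_turnover_eur: Decimal, is_sme: bool = False) -> Decimal:
    """Return the maximum fine for a tier.

    Assumption: the ceiling is the higher of the two amounts, or the lower
    of the two for SMEs and start-ups.
    """
    share, fixed_amount = FINE_TIERS[tier]
    turnover_based = share * global_turnover_eur
    if is_sme:
        return min(turnover_based, fixed_amount)
    return max(turnover_based, fixed_amount)


# A company with EUR 1 billion turnover: 7% (EUR 70 million) exceeds EUR 35 million.
print(fine_ceiling("prohibited_ai", Decimal("1000000000")))  # 70000000.00
```

These are ceilings only. The fine actually imposed in a given case is set by the authorities and may be far lower.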

A great step forward for AI regulation

The EU AI Act is set to completely transform the way that artificial intelligence is utilised and marks a significant step forward for ensuring that the development and use of AI poses as little risk as possible. The introduction of these requirements will surely bring about an increase in transparency, improved accountability, and a reduction in harmful practices, making AI safer than ever for deployers and consumers alike.

How can DPAS help?

To better understand AI, machine learning (ML), and the relationship that they have with data privacy, book onto our expert-led training course, AI for Data Protection Practitioners. Learn how to develop effective AI policies for your organisation, understand how to conduct an AI DPIA, and more.

Train with us for:

- Courses taught by renowned industry experts

- BCS- and CPD-accredited courses to sharpen up your CV

- Complex subjects made easy to understand

- A qualification that demonstrates your knowledge and expertise

Book a place with us today, or view our full course catalogue to see everything we offer.